Abstract

This report summarizes the technical work of

the Mathematical and Computational Sciences Division of NIST's Information

Technology Laboratory. Included are

details of technical projects, as well as information on publications,

technical talks, and other professional activities in which the Division's

staff has participated.

For further information, contact Ronald

F. Boisvert, Mail Stop 8910, NIST, Gaithersburg, MD 20899-8910, phone

301-975-3812, email [email protected],

or see the Division’s web site at http://math.nist.gov/mcsd/.

Thanks to Robin Bickel for collecting and

organizing the information contained in this report.

Part I - Overview

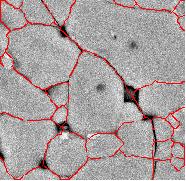

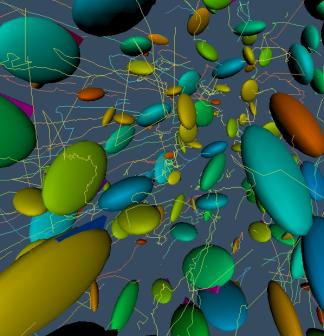

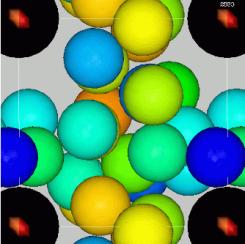

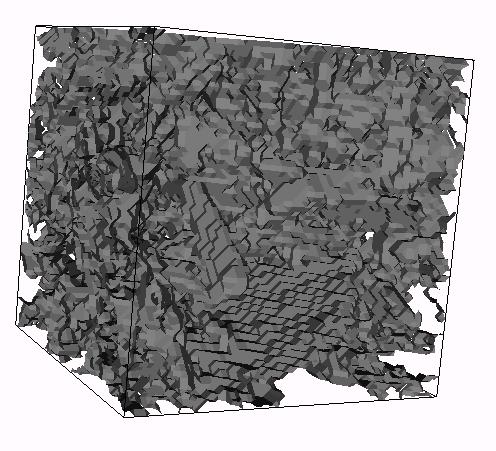

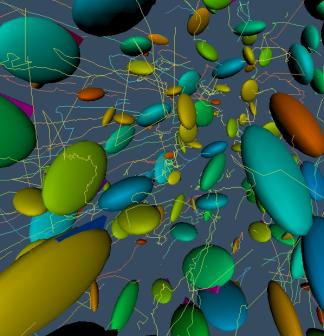

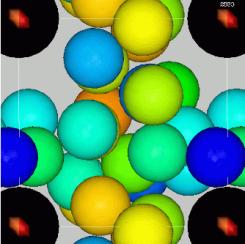

Charge density on a computed

diffusion-limited cluster aggregate.

The

mission of the Mathematical and Computational Sciences Division (MCSD) is

stated as follows.

Provide

technical leadership within NIST in modern analytical and computational methods

for solving scientific problems of interest to U.S. industry. The division

focuses on the development and analysis of theoretical descriptions of

phenomena (mathematical modeling), the design of requisite computational

methods and experiments, the transformation of methods into efficient numerical

algorithms for high-performance computers, the implementation of these methods

in high-quality mathematical software, and the distribution of software to

NIST and industry partners.

Within the scope of

our charter, we have set the following general goals.

o Ensure

that sound mathematical and computational methods are applied to NIST problems.

o Improve

the environment for the computational science and engineering research community at

large.

With

these goals in mind, we have developed a technical program in five major areas.

- Applied Mathematics

- Mathematical Software

- High Performance Computing and

Visualization

- Digital Library of Mathematical

Functions

- Quantum Information

The

first and third areas are accomplished primarily via collaborations with other

technical units of NIST, supported by mathematical research in key areas. Projects in the second area are typically

motivated by internal NIST needs, but have products, such as software, which

are widely distributed. This work is also often done in conjunction with external

forums whose goals are to promulgate standards and best practices. The fourth and fifth areas represent large

special projects. These are being done

in collaboration with other ITL Divisions, as well as with the NIST Physics and

Electronics and Electrical Engineering Laboratories. Each of these is described in further detail below.

Our

customers span all of the NIST Laboratories, as well as the computational

science community at large. We have developed a variety of strategies to

increase our effectiveness in dealing with such a wide customer base. We take

advantage of leverage provided via close collaborations with other NIST units,

other government agencies, and industrial organizations. We develop tools with

the highest potential impact, and make online resources easily available. We

provide routine consulting, as well as educational and training opportunities

for NIST staff. We maintain a state-of-the-art visualization laboratory.

Finally, we select areas for direct external participation that are fundamental

and broadly based, especially those where measurement and standards can play an

essential role in the development of new products.

Division

staff maintain expertise in a wide variety of mathematical domains, including

linear algebra, special functions, partial differential equations,

computational geometry, Monte Carlo methods, optimization, inverse problems,

and nonlinear dynamics. We also provide expertise in parallel computing,

visualization, and a variety of software tools for scientific computing.

Application areas in which we have been actively involved this year include

atomic physics, materials science, fluid mechanics, electromagnetics,

manufacturing engineering, construction engineering, wireless communications,

bioinformatics, image analysis, and computer graphics.

In

addition to our direct collaborations and consulting, output of Division work

includes publications in refereed journals and conference proceedings,

technical reports, lectures, short courses, software packages, and Web

services. In addition, MCSD staff members participate in a variety of

professional activities, such as refereeing manuscripts and proposals, service

on editorial boards, conference committees, and offices in professional

societies. Staff members are also active in educational and outreach programs

for mathematics and computer science students at all levels.

In

this section we provide additional background on each of the technical thrust

areas, including their impetus, general goals, and expected long-term

outcomes. The identification of these

areas was part of a NIST-wide effort to identify and document its programs of

work. Details on the technical work

that has been undertaken in each of these areas can be found in Part II.

Impetus. As computing resources become more plentiful

there is increased emphasis on answering problems by "putting problems on the

computer". Formulating the right

questions, translating them into tractable computations, and analyzing the

resulting output, are all mathematics-intensive operations. It is rare for a bench scientist to be

expert both in their primary subject area and in the often deep and subtle

questions of the mathematics that they engender. Thus, NIST needs a sustained cadre of professional mathematicians

who can bring their expertise to bear on the wide variety of mathematics

problems found at NIST. Often, the

mathematics resulting from NIST problems is widely applicable outside NIST, and

hence there is added benefit.

Activities. MCSD mathematicians engage in consulting and

long-term collaboration with NIST scientists and their external customers. They also work to develop requisite

mathematical technologies, including mathematical models, methods and software. The following are examples of such

activities.

o Mathematical modeling of solidification processes

o Monte Carlo methods for combinatorial counting

problems

o Terrain modeling

o Micromagnetic modeling

o Modeling of complex material microstructures

o Modeling of high-speed machining processes

o Development and analysis of image sharpening methods

o Computer graphic rendering of material surfaces

o Computational techniques in bioinformatics

o Mathematical problems in construction metrology

Expected

Outcomes. Improved mathematical

techniques and computational procedures will lead to more effective use of

mathematical and computational modeling at NIST. Areas such as materials science, high-speed machining, and

construction technology will see immediate improvements in methodology. Distribution of related methodology and

tools (including computer software) will allow these benefits to accrue to the

scientific community at large. Examples

of the latter include (1) more widespread study of material science problems

and the development of new technologies characterized by complex material

microstructure, and (2) improvement in the accuracy and reliability of

micromagnetic modeling software.

Impetus. Mathematical modeling in the sciences,

engineering, and finance inevitably leads to computation. The core of computations is typically a

series of well-defined recurring mathematical problems, such as the solution of

a differential equation, the solution of a linear system, or the computation of

a transform. Much mathematical research

has focused on how to solve such problems efficiently. The most effective means of passing on this

expertise to potential customers is by encapsulating it in reusable software

components. Since much work at NIST

relies on such computations, it has a natural interest in seeing that such

components are developed, tested, and made available. The computational science community outside of NIST has similar

needs. Programming methodologies and

tools for developing efficient and reliable mathematical modeling codes in

general, and for developing and testing reusable mathematical software

components in particular, are also of interest.

Activities.

MCSD staff members develop mathematical

algorithms and software in response to current and anticipated NIST needs.

They are also involved in the development of

standards for mathematical software tools, and in the widespread dissemination

of research software, tools, testing artifacts, and related information to the

computational science community at large. The following are examples of such activities.

o Numerical computing in Java

o The Sparse BLAS

o Parallel adaptive multigrid methods

o Template Numerical Toolkit

o Guide to Available Mathematical Software

o The Matrix Market

Expected

Outcomes. Improved access to

general-purpose mathematical software will facilitate the rapid development of

science and engineering applications. In addition, the availability of

community standards and testing tools will lead to improved portability,

performance, and reliability of science and engineering applications.

Impetus. The most demanding mathematical modeling and

data analysis applications at NIST require resources that far exceed those

routinely found on the scientist's desktop.

In order to effect such computations in a reasonable amount of time, one

must often resort to parallel computers.

The effective use of parallel computers requires that computational

algorithms be redesigned, often in a very fundamental way. Effecting these changes, and debugging the

resulting code, requires expertise and a facility with specialized software

tools that most working scientists do not possess. Hence, it is necessary to

support the use of such facilities with specialized expertise in these areas. Similarly, the use of sophisticated

visualization equipment and techniques is necessary to adequately digest the

massive amount of data that these high performance computer simulations can

produce. It is not easy to become

facile with the use of such tools, and hence specialized expertise in their use

must also be provided.

Activities. MCSD staff members collaborate with NIST

scientists on the application of parallel computing to mathematical models of

physical systems. In addition, they

collaborate with NIST scientists on the application of advanced scientific

visualization and data mining techniques.

They develop and maintain supporting hardware and software tools,

including a fully functional visualization laboratory. MCSD staff members also provide consulting

in the use of applications software provided by the NIST central computing

facility. The following are examples of

activities in this area.

o

Parallelization of Feff x-ray absorption code

o

Parallel computation of the ground state of neutral helium

o

Parallel genetic programming

o

Parallel computing and visualization of the flow of

suspensions

o

Modeling and visualization of dendritic growth

o

Visible cement database

o

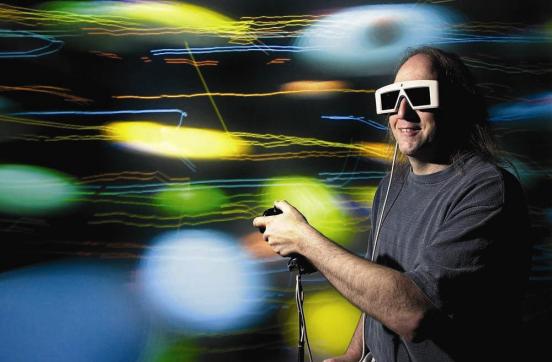

Immersive visualization

Expected

Outcomes. Working closely with NIST

scientists to improve the computational performance of their models will lead

to higher fidelity simulations, and more efficient use of NIST central

computing resources. New scientific

discovery will be enabled through the insight provided by visualization and

data mining. Finally, widespread dissemination of supporting techniques and

tools will improve the environment for high performance computing and

visualization at large.

Impetus. The special functions of applied mathematics

are extremely useful tools in mathematical and computational modeling in a very

wide variety of fields. The effective

use of these tools requires access to a convenient source of information on the

mathematical properties of these functions such as series expansions,

asymptotics, integral representations, relations to other functions, methods of

computation, etc. For more than 35 years the NBS Handbook of Mathematical

Functions (AMS 55) has served this purpose. However, this book is now

woefully out of date. Many new properties of these functions are known, many

new scientific applications of them have come into use, and current

computational methods are completely different from those of the 1950s.

Finally, today there are new and more effective means of presenting the

information: online, Web-based, highly interactive, and visual.

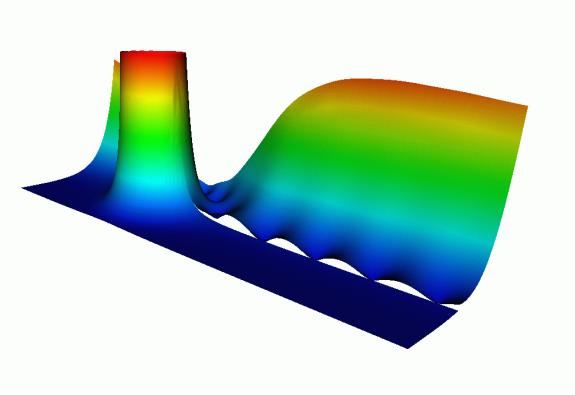

Activities. The purpose of this project is to develop a

freely available, online, interactive resource for information on the special

functions of applied mathematics. With

the help of some 40 outside technical experts, we are surveying the technical

literature, extracting the essential properties of interest in applications,

and packaging this information in the form of a reference compendium. To support the presentation of such data on

the Web, we are developing mathematics-aware search tools, indices, thesauri,

and interactive Web-based visualizations.

Expected

Outcomes. Widespread access to

state-of-the-art data on the special functions will improve mathematical modeling

in many areas of science, statistics, engineering, and finance. The DLMF will

encourage standardization of notations and normalizations for the special

functions. Users of the special functions will have an authoritative reference

to cite the functions they are using, providing traceability to NIST for

standardized mathematical objects.

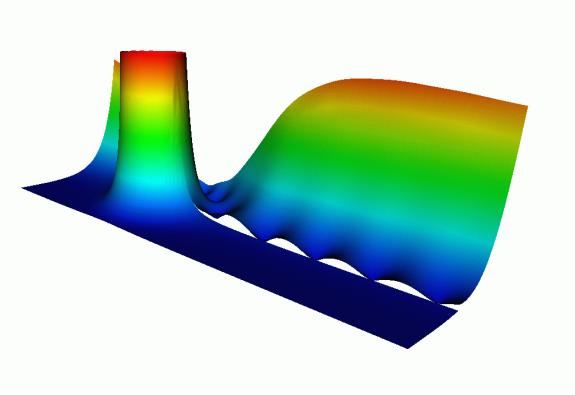

Students Brianna

Blaser (Carnegie Mellon) and Elaine Kim (Stanford) work with

Bonita Saunders on

graphics for the Digital Library of Mathematical Functions.

Impetus. Quantum information networks have the

potential of providing the only known provably secure physical channel for the

transfer of information. The technology

has only been demonstrated in laboratory settings, and a solid measurement and

standards infrastructure is needed to move this into the technology development

arena. Quantum computers have potential for speeding up previously intractable

computations. ITL has been asked to

support the work in the NIST Physics and Electronics and Electrical Engineering

Laboratories to develop quantum processors and memory, concentrating on the

critical areas of error correction, secure protocols, algorithm and tool

development, programming, and information theory.

Activities. This project is an ITL-wide effort with

participants in six Divisions. We are

working to develop a quantum communications test bed facility for the DARPA

QuIST program as part of a larger effort to develop a measurement and standards

infrastructure to support quantum communications. We are further supporting the

NIST Quantum Information program through collaborative research with the NIST

Physics Laboratory related to quantum information theory. Within MCSD we are working on issues related

to the use of quantum entanglement for long-distance communication, the

modeling of neutral atom traps as quantum processors, and the development and

analysis of quantum algorithms.

Expected

Outcomes. We expect that the

development of an open, measurement-focused test bed facility will allow a

better understanding of the practical commercial potential for secure quantum

communication, and serve the development of standardized network protocols for

this new communications technology. By

working closely with staff members of the NIST Physics Laboratory, who are

working to develop quantum processors, we expect that early processor designs

will be more capable and useable.

In

this section we will highlight some of the technical accomplishments of the

Division for FY2001. Further details

can be found in Part II.

Scientific

and engineering data is increasingly being generated in the form of

images. Images produced at NIST are

from a wide variety of sources, from scanning electron microscopes to laser radar. Applications range from combinatorial

chemistry to building construction. The

area of image analysis has blossomed into a significant area of applied

mathematics research in recent years, for which new fundamental mathematical

technologies are continuing to be developed.

MCSD staff members are working on a variety of projects in collaboration

with the NIST Laboratories in which image analysis plays a vital role. Examples of these follow.

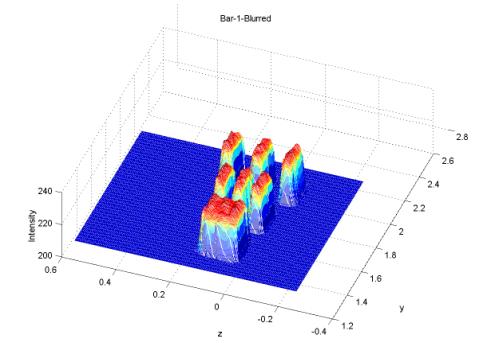

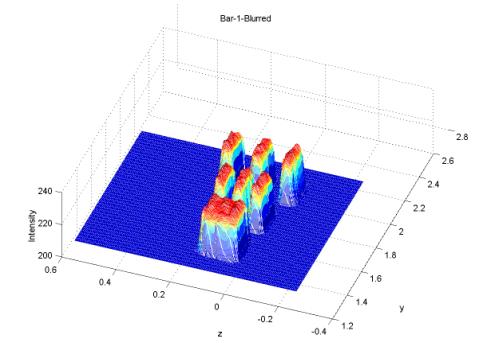

Blind

Direct Deconvolution. Scanning

electron microscopes (SEMs) are basic research tools in many of NIST's programs

in nanotechnology. A major concern in scanning electron microscopy is the loss

of resolution due to image blurring caused by electron beam point spread. The

shape of that beam can change over time, and is usually not known to the

microscopist. Real-time blind deconvolution of SEM imagery, if achievable,

would significantly extend the capability of electron microprobe

instrumentation. Blind deconvolution is a very difficult problem in which ill

conditioning is compounded with non-uniqueness. Most known approaches to that

problem are iterative in nature.

Such processes are typically quite slow, can develop stagnation points,

or diverge altogether. Alfred Carasso of MCSD has developed reliable direct (non-iterative)

methods, in which the fast Fourier transform is used to solve appropriately

regularized versions of the underlying ill-posed parabolic differential

equation problem associated with the blur. When the point-spread function (psf)

is known, Carasso's SECB method can deblur 512x512 images in about 1 second of

CPU time on current desktop platforms. Carasso has recently developed two new

direct blind deconvolution techniques based upon SECB. These methods detect the

signature of the psf from appropriate 1-D Fourier analysis of the blurred

image. The detected psf is then input into the SECB method to obtain the

deblurred image. When applicable, these blind methods can deblur 512x512 images

in less than a minute of CPU time, which makes them highly attractive in

real-time applications. Carasso has

been applying this method with great success to images obtained from NIST

SEMs. The methods are applicable in a

wide variety of imaging modalities in addition to SEM imaging.
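
The report does not give the details of the SECB method, so the following is only a minimal sketch of the general idea of direct (non-iterative) Fourier deblurring when the psf is known. It uses a generic Tikhonov-regularized inverse filter on a 1-D periodic signal, which is an assumption made for illustration and is not Carasso's algorithm. The function names, the Gaussian psf, and the regularization parameter `lam` are all hypothetical.

```python
import cmath
import math


def fft(x, inverse=False):
    """Recursive radix-2 FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    if n % 2:
        raise ValueError("length must be a power of two")
    even = fft(x[0::2], inverse)
    odd = fft(x[1::2], inverse)
    sign = 1.0 if inverse else -1.0
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(sign * 2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


def ifft(spectrum):
    """Inverse transform, including the 1/n normalization."""
    n = len(spectrum)
    return [v / n for v in fft(spectrum, inverse=True)]


def gaussian_psf(n, sigma):
    """Periodic Gaussian psf centered at index 0, normalized to sum to 1."""
    w = [math.exp(-0.5 * (min(i, n - i) / sigma) ** 2) for i in range(n)]
    total = sum(w)
    return [v / total for v in w]


def blur(signal, psf):
    """Circular convolution of signal with psf, computed in Fourier space."""
    S, H = fft(signal), fft(psf)
    return [v.real for v in ifft([s * h for s, h in zip(S, H)])]


def deblur(blurred, psf, lam=1e-6):
    """Regularized inverse filter: F = conj(H) G / (|H|^2 + lam).

    The parameter lam (hypothetical) damps frequencies where the psf
    spectrum is nearly zero, which is where plain division by H would
    amplify noise without bound.
    """
    G, H = fft(blurred), fft(psf)
    F = [h.conjugate() * g / (abs(h) ** 2 + lam) for g, h in zip(G, H)]
    return [v.real for v in ifft(F)]
```

The whole restoration costs a fixed number of transforms, with no iteration and hence no stagnation or divergence, which is the property that makes direct methods attractive for real-time use. With noisy data, `lam` would need to be chosen much larger than in a noise-free test.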

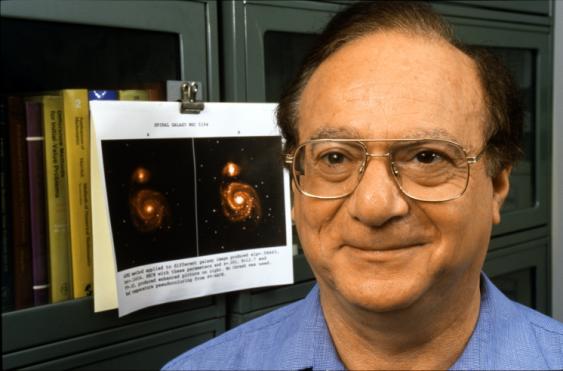

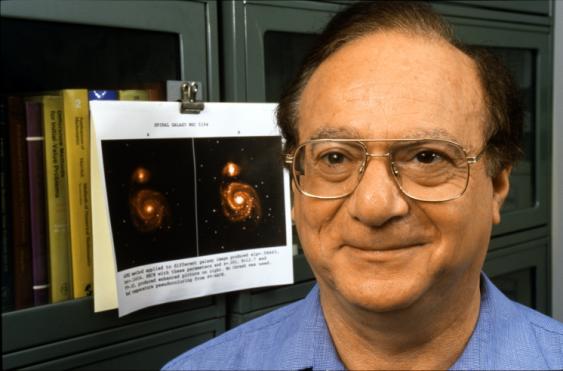

Alfred Carasso has developed a unique highly efficient method for blind

deconvolution of images. This is

currently being used in several applications of electron microscopy at

NIST. The method is more widely

applicable, as indicated by the enhancement of the Whirlpool Galaxy

(M51) image, shown in the photo.

Feature

Extraction, Classification. In

applications like combinatorial chemistry, large sets of such images are

generated which must be processed automatically to identify information of

interest. Isabel Beichl has been working

with the NIST Polymers Division to automatically detect areas of wetness and

dryness in images of polymer dewetting processes, and to generate summary

statistics related to the geometry of each image. Another need is to automatically classify the state of the

dewetting process that each image represents.

An algorithm of Naiman and Priebe based upon importance sampling and

Bayesian statistics is being adapted for this purpose. In a separate effort, Barbara am Ende is

working with the Semiconductor Electronics Division to develop techniques for automatically

detecting and counting lattice planes between sidewalls in High

Resolution Transmission Electron Microscopy (HRTEM) images. This capability is a

key step in the development of precision linewidth standards for

nanometer-level semiconductor metrology.

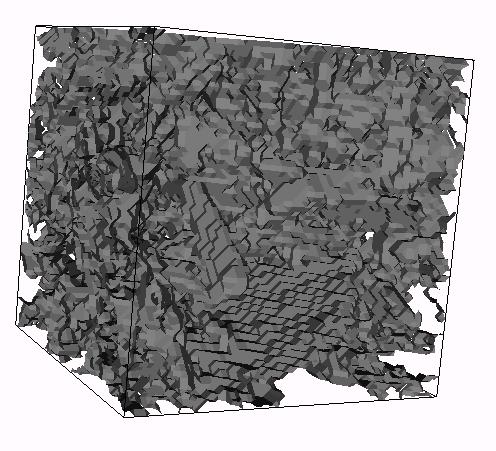

Micrographs

to Computational Models. Image

analysis is the first step in the processing done by the popular OOF software

for analyzing materials with complex microstructure. Developed by MCSD's Stephen Langer in association with staff of

the NIST Material Science and Engineering Laboratory, OOF begins with a

micrograph of a real material with multiple phases, grain boundaries, holes,

and cracks, identifies all the parts, and then generates a finite element mesh

consistent with the complicated geometry.

Material scientists can then use the result to perform virtual tests on

the material, such as raising its temperature and pulling on it. The resulting stresses and strains can then

be displayed. OOF has become a popular

tool in the material science community, and has won internal and external

awards. This year Langer worked with

Robert Jin, a talented intern from Montgomery Blair High School, to develop a

technique for automatically detecting grain boundaries in micrographs. The

algorithm is based upon a modified Gabor wavelet filter and edge linking. This will be incorporated into OOF2, now

under development. OOF2 will include a

variety of new capabilities and will be easier to extend.

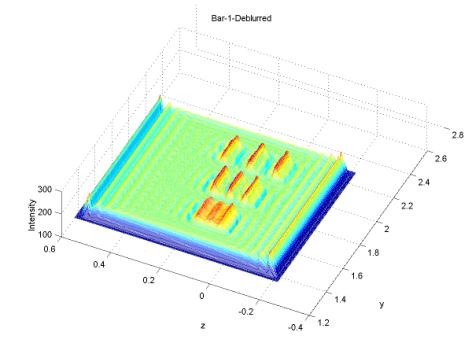

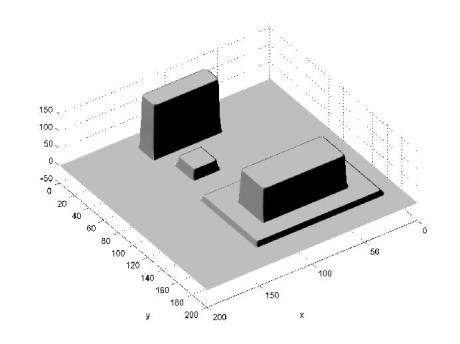

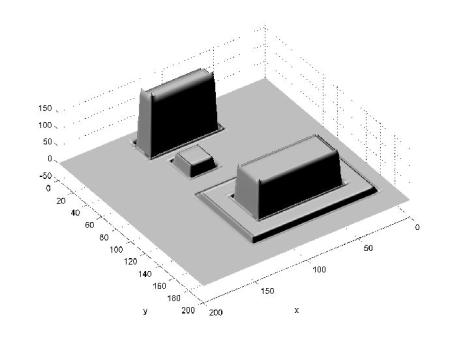

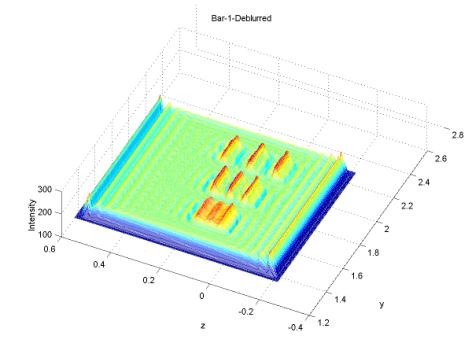

LADAR

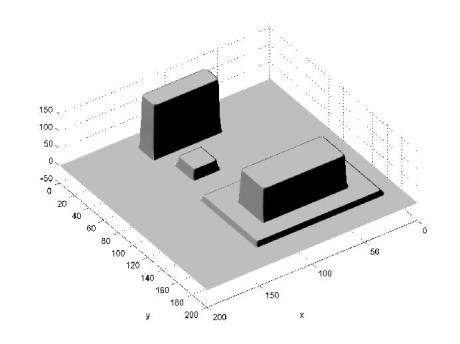

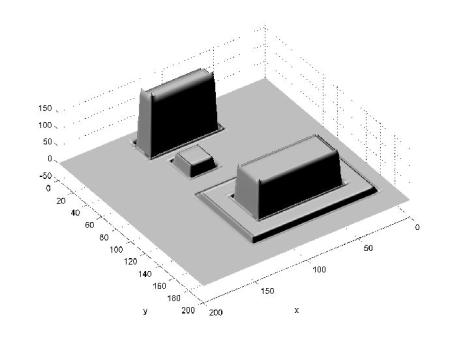

and 3D Imaging. Laser radar (LADAR)

systems provide a relatively inexpensive method for terrain mapping. Such systems can optically scan a given

scene, providing distance and intensity readings as a function of scanning

angle. In principle, such data can be

used to construct a geometrical model of the scanned scene. In practice this remains a very difficult

process. The data is voluminous, noisy,

and full of unnatural artifacts. The

data is one-sided, only providing the view as seen from a particular vantage

point. Hence, to develop a true

three-dimensional model, scans from multiple sources must be registered and the

data fused. Christoph Witzgall has been

working with staff of the NIST Building and Fire Research Laboratory to develop

three-dimensional models of construction sites. With such a model, as-built conditions could be automatically

assessed, current construction processes could be viewed, planned sequences of

processes could be tested, and object information could be retrieved on

demand. Witzgall has developed

techniques for cleaning and registering LADAR data, and extracting a

triangulated irregular network model from it.

These techniques have been tested on applications such as determining

volumes of excavated earth. In a

related effort, David Gilsinn is studying the use of LADAR to read

object-identifying bar codes on remote objects. The reflectance data is noisy and defocused, and Gilsinn is

developing deconvolution techniques to reconstruct bar codes from the LADAR

data. This is challenging since the

LADAR is not a single beam, but rather a collection of multiple sub-beams. Some progress has been made using averaging

filters. A more accurate model for the

convolution kernel is being developed.
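
To make the excavation-volume application concrete, the sketch below computes the volume under a triangulated irregular network (TIN) by summing prisms: for a piecewise-linear surface, the volume over each triangle is its plan-view area times the mean of its three vertex heights. This is a textbook calculation offered only as an illustration, not the technique developed by Witzgall. The function names and the assumption that both surveys share one triangulation are hypothetical.

```python
def triangle_area_xy(p, q, r):
    """Area of the triangle's projection onto the x-y plane."""
    cross = (q[0] - p[0]) * (r[1] - p[1]) - (r[0] - p[0]) * (q[1] - p[1])
    return abs(cross) / 2.0


def volume_under_tin(vertices, triangles):
    """Volume between the plane z = 0 and a piecewise-linear TIN surface.

    vertices  -- list of (x, y, z) points
    triangles -- list of (i, j, k) index triples into vertices
    """
    total = 0.0
    for i, j, k in triangles:
        p, q, r = vertices[i], vertices[j], vertices[k]
        mean_height = (p[2] + q[2] + r[2]) / 3.0
        total += triangle_area_xy(p, q, r) * mean_height
    return total


def excavated_volume(before, after, triangles):
    """Volume removed between two surveys that share one triangulation."""
    return volume_under_tin(before, triangles) - volume_under_tin(after, triangles)
```

In practice the two scans would have to be cleaned and registered to a common coordinate frame first, which the text identifies as the difficult part of the problem.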

Mathematical problems with discrete

components are increasing in frequency at NIST, turning up in applications from

nanotechnology to network analysis.

MCSD staff members have become involved in a variety of these efforts,

and are developing some of the basic technologies to tackle such problems

efficiently. Some examples follow.

Combinatorial

counting problems. Combinatorial

problems arise in a wide variety of applications, from nanotechnology to

computer network analysis. Fundamental

models in these fields are often based on quantities that are extremely

difficult (i.e., exponentially hard) to compute. We have devised methods to compute such quantities approximately

(with known error bars) using Monte Carlo methods. Traditional Monte Carlo methods can be slow to converge, but we

have made progress in significantly speeding up these computations using

importance sampling. In the past few

years Isabel Beichl and colleagues have made progress in evaluating the

partition function for describing the probability distribution of states of a

system. In a number of settings, including the Ising model, the q-state Potts

model, and the monomer-dimer model, no closed form expressions are known for

three-dimensional cases and obtaining exact solutions of the problems is known

to be computationally intractable. We

have developed a class of probabilistic importance sampling methods for these

problems that appears to be much more effective than the standard Markov Chain

Monte Carlo technique. We have used

these techniques to obtain accurate solutions for both the 3D dimer covering

problem and the more general monomer-dimer problem. An importance sampling

formulation for the 3D Ising model has also been constructed. This year, new Monte Carlo/importance

sampling techniques and software have been developed to estimate the number of

independent sets in a graph. A graph is a set of vertices with a set of

connections between some of the vertices. An independent set is a subset of the

vertices, no two of which are connected. The problem of counting independent

sets arises in data communications, in thermodynamics, and in graph theory

itself. For example, it is closely

related to issues of reliability of computer networks. Physicists have used estimates of the number of

independent sets to estimate the hard sphere entropy constant. This constant is known analytically in 2D,

but no analytical result is known in 3D.

Beichl, Dianne O'Leary, and Francis Sullivan have been able to

use their approach to estimate the constant for a 3D cubic lattice. They are

now working on the case of an FCC lattice.
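
The following is a minimal sketch of how a Monte Carlo estimate of the number of independent sets can be formed. It uses the simplest sequential sampling scheme, in which each unblocked vertex is included with probability 1/2 and the sample is weighted by the inverse of its probability, so that the average weight is an unbiased estimate of the count. The uniform choice is an assumption made for illustration; the importance functions actually used by Beichl, O'Leary, and Sullivan are not described in this report. All function names are hypothetical.

```python
import random


def sample_weight(adjacency, rng):
    """Draw one independent set sequentially and return 1 / its probability.

    Vertices are visited in index order. A vertex with no already-chosen
    neighbor has two options (in or out), each taken with probability 1/2,
    so the weight doubles. A blocked vertex is forced out at no cost.
    """
    chosen = set()
    weight = 1
    for v in range(len(adjacency)):
        if any(u in chosen for u in adjacency[v]):
            continue  # blocked: a single option, weight unchanged
        weight *= 2
        if rng.random() < 0.5:
            chosen.add(v)
    return weight


def estimate_independent_sets(adjacency, samples, seed=0):
    """Average the weights of many draws to estimate the count."""
    rng = random.Random(seed)
    return sum(sample_weight(adjacency, rng) for _ in range(samples)) / samples


def count_independent_sets_exact(adjacency):
    """Brute-force count for checking; feasible only for tiny graphs."""
    n = len(adjacency)
    count = 0
    for mask in range(1 << n):
        if all(not (mask >> v & 1 and mask >> u & 1)
               for v in range(n) for u in adjacency[v]):
            count += 1
    return count
```

Here `adjacency[v]` is the list of neighbors of vertex `v`. The estimator is unbiased for any graph, but its variance grows quickly with graph size, which is why a well-chosen importance function matters in practice.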

Bioinformatics. Computational biology is currently

experiencing explosive growth in its technology and industrial applications.

Mathematical and statistical methods dominated the development of the field but

as the emphasis on high throughput experiments and analysis of genetic data

continues, computational techniques have also become essential. We are working to understand the

mathematical issues in dealing with large biological datasets with the aim of

developing expertise that can be applied to future NIST problems. In the process, we are developing techniques

and tools of widespread interest. One

of these is GenPatterns. Fern Hunt,

along with former guest researcher Antti Pessonen, and student Daniel Cardy

developed this program to compute and graphically display DNA or RNA

subsequence frequencies and their recurrence patterns, as well as to create

Markov models of the data. GenPatterns is now a part of the NIST Bioinformatics/Computational

Biology software website currently being constructed by the NIST Chemical

Science and Technology Laboratory. More

recently we have turned our attention to the problem of aligning protein

sequences with gaps. Database searches

of protein sequences are based on algorithms that find the best matches to a

query sequence, returning both the matches and the query in a linear

arrangement that maximizes underlying similarity between the constituent amino

acid residues. Very fast algorithms based on dynamic programming exist for

aligning two or more sequences if the possibility of gaps is ignored. Gaps are

hypothesized insertions or deletions of amino acids that express mutations that

have occurred over the course of evolution. The alignment of sequences with

such gaps remains an enormous computational challenge. Fern Hunt and Anthony

Kearsley are currently working with Honghui Wan of NIH to develop an

alternative approach based on Markov decision processes. The optimization

problem then becomes a linear programming problem and it is amenable to

powerful and efficient techniques for solution. We are creating software for

multiple sequence alignment based on these ideas.
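
As an illustration of the kind of computation attributed to GenPatterns above, the sketch below counts overlapping subsequences of a fixed length in a DNA string and estimates a first-order Markov model from adjacent bases. It is not the GenPatterns code, whose internals are not described here. The function names and the choice of a first-order model are assumptions.

```python
from collections import Counter, defaultdict


def subsequence_counts(sequence, k):
    """Count every overlapping length-k subsequence (k-mer)."""
    return Counter(sequence[i:i + k] for i in range(len(sequence) - k + 1))


def first_order_markov(sequence):
    """Estimate P(next base | current base) from adjacent pairs."""
    pair_counts = defaultdict(Counter)
    for current, following in zip(sequence, sequence[1:]):
        pair_counts[current][following] += 1
    model = {}
    for current, followers in pair_counts.items():
        total = sum(followers.values())
        model[current] = {base: n / total for base, n in followers.items()}
    return model
```

For example, `subsequence_counts("ACGACGT", 3)` tallies the five overlapping triples of that string, and `first_order_markov("ACGACGT")` returns one row of transition probabilities for each base that has a successor.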

Quantum

algorithms. We have

recently begun a project in the area of quantum information science. We are collaborating with other ITL

Divisions and the NIST Physics Laboratory in the development and analysis of

quantum-based systems for communication and computation. One component of this is the study of

algorithms for quantum computers. The

principal advances in this field thus far have been Shor’s algorithm for

factoring and Grover’s algorithm for searching an unordered set, each of which

exhibits significant speedups which are thought not to be possible on classical

computers. A new postdoctoral

appointee, David Song, is working with Isabel Beichl and Francis Sullivan on

quantum algorithms for determining whether a finite function over the integers

is one-to-one. They are constructing a

quantum algorithm for this problem which they hope to show has a complexity of O(SQRT(n))

steps. Classical algorithms require n

steps to do this computation. The

proposed algorithm uses phase symmetry, Grover's search algorithm and results

about the pth complex roots of unity for a prime p.
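
The proposed one-to-one algorithm is not specified in this report, so the sketch below illustrates only the two reference points mentioned above. It gives the classical n-step check and a classical state-vector simulation of Grover's search, showing that about (pi/4)·sqrt(N) iterations suffice to find one marked item among N. The function names are hypothetical, and the simulation is an illustration of the square-root scaling, not a quantum implementation.

```python
import math


def is_one_to_one(values):
    """Classical check: one pass over the n function values."""
    seen = set()
    for v in values:
        if v in seen:
            return False
        seen.add(v)
    return True


def grover_success_probability(num_items, marked, iterations=None):
    """Simulate Grover's search for a single marked index.

    Returns (iterations used, probability of measuring the marked item).
    By default the iteration count is floor(pi/4 * sqrt(num_items)).
    """
    if iterations is None:
        iterations = int(math.floor(math.pi / 4 * math.sqrt(num_items)))
    amplitude = 1.0 / math.sqrt(num_items)
    state = [amplitude] * num_items              # uniform superposition
    for _ in range(iterations):
        state[marked] = -state[marked]           # oracle: flip the marked sign
        mean = sum(state) / num_items
        state = [2.0 * mean - a for a in state]  # inversion about the mean
    return iterations, state[marked] ** 2
```

For N = 64 the default is 6 iterations, compared with an average of about 32 classical probes of an unordered list.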

Concrete

is an essential ingredient of the national civil engineering

infrastructure. Some 6,100 companies

support this infrastructure, with a gross annual product of $35 billion when

delivered to a work site, and over $100 billion when in place in a building. In

recent years there has been a growing recognition of the great potential for

improving the performance of cement and concrete products with the development

of new understanding of the materials and processes. The NIST Building and Fire

Research Laboratory (BFRL) has over two decades' worth of experience in

experimental, theoretical, and computational work on cement and concrete and is

a world leader in this field. MCSD staff members in the Scientific Applications

and Visualization Group have contributed to this effort by working closely with

BFRL scientists in developing parallel implementations of their computational

models, and in providing effective visualizations of their results. Among these are models of the flow of

suspensions, flow in porous media, and the elastic properties of concrete. MCSD contributions have significantly

extended the class of problems that can be addressed by BFRL researchers. Striking visualizations of the results of

these simulations, including immersive visualizations, have also been developed

by MCSD staff. (Examples are included

elsewhere in this report.)

In

January 2001 the Virtual Cement and Concrete Testing Laboratory (VCCTL)

consortium was formed under the leadership of BFRL. The overall goals of the consortium are to develop a virtual

testing system to reduce the amount of physical testing of concrete, expedite

the research and development process, and facilitate innovation. The consortium

has seven industrial members. MCSD is a partner in the effort, and is taking

the lead in visualization and parallelization efforts.

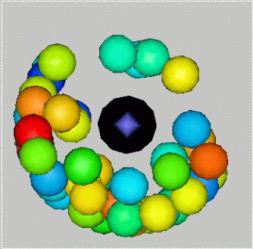

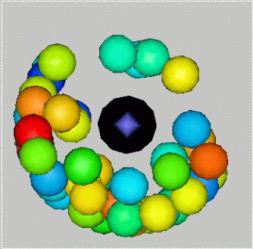

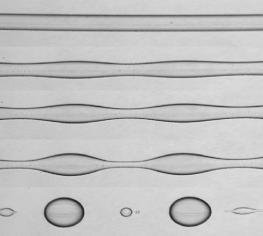

This image shows a volume rendering of a

cement paste sample.

The actual sample is less than one

millimeter wide.

A

popular computer code for X-ray absorption spectroscopy (XAS) now runs 20-30

times faster, thanks to a cooperative effort of MCSD and the NIST Materials

Science and Engineering Laboratory (MSEL).

XAS is widely used to study the atomic-scale structure of materials, and

is currently employed by hundreds of research groups in a variety of fields,

including ceramics, superconductors, semiconductors, catalysis, metallurgy,

geophysics, and structural biology. Analysis of XAS relies heavily on ab

initio computer calculations to model x-ray absorption in new materials.

These calculations are computationally intensive, taking days or weeks to

complete in many cases. As XAS becomes more widely used in the study of new

materials, particularly in combinatorial materials processing, it is crucial to

speed up these calculations. One of the

most commonly used codes for such analyses is FEFF. Developed at the University

of Washington, FEFF is an automated program for ab initio multiple

scattering calculations of X-ray Absorption Fine Structure (XAFS) and X-ray

Absorption Near-Edge Structure (XANES) spectra for clusters of atoms. The code

yields scattering amplitudes and phases used in many modern XAFS analysis

codes. FEFF has a user base of over 400 research groups, including a number of

industrial users, such as Dow, DuPont, Boeing, Chevron, Kodak, and General

Electric.

To

achieve faster speeds in FEFF, James Sims of the MCSD worked with Charles

Bouldin of the MSEL Ceramics Division to develop a parallel version,

FeffMPI. In modifying the code to run

on the NIST parallel processing clusters using a message-passing approach, they

gained a 20-30-fold improvement in speed over the single processor code. Combining

parallelization with improved matrix algorithms may allow the software to run

100 or more times faster than current single-processor codes. The latter work

is in progress. The parallel version of

the XAS code is portable, and is now also operating on parallel processing

clusters at the University of Washington and at DoE's National Energy Research

Scientific Computing Center (NERSC). One NERSC researcher has reported doing a

calculation in 18 minutes using FeffMPI on the NERSC IBM SP2 cluster that would

have taken 10 hours before. In 10 hours this researcher can now do a run that

would have taken months before, and hence would not have been even attempted.
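
The NERSC timings quoted above can be turned into a speedup factor with a line of arithmetic. The short sketch below does only that; the variable names are illustrative, and the two timings are the ones reported in the text.

```python
# Timings reported for the NERSC calculation described above.
serial_minutes = 10 * 60      # about 10 hours with the single-processor code
parallel_minutes = 18         # the same calculation with FeffMPI

speedup = serial_minutes / parallel_minutes
print(f"speedup: {speedup:.1f}x")   # prints "speedup: 33.3x"
```

The result, roughly 33, is of the same order as the 20-30-fold improvement measured on the NIST clusters.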

A

large number of MCSD staff members received significant awards this year. Some

of these are highly distinguished awards from external groups, while others are

prized internal awards.

External

Awards. Anthony Kearsley, an MCSD

mathematician, received the Arthur Flemming Award in June 2001. The Flemming

Award is given annually to recognize outstanding Federal employees with less

than 15 years of service. The Flemming Award Commission selects the honorees,

and the award is sponsored by George Washington University and Government

Executive magazine. This year 12 winners were selected from throughout the

federal government, six in the administrative category and six in the science

and engineering category. Kearsley was cited for a sustained record of

contributions to the development and use of large-scale optimization techniques

for the solution of partial differential equations arising in science and

engineering. Noted were his contributions to the solution of problems in such

diverse areas as oil recovery, antenna design, wireless communications, climate

modeling, optimal shape design, and high-temperature superconductors. His tireless

work as a mentor and leading proponent of careers in mathematics for students

at the high school, undergraduate, and graduate levels was also cited. This was the second year in a row that an

MCSD staff member received the Flemming award.

Last year Fern Hunt was among the 12 winners.

Anthony

Kearsley, winner of the 2001 Arthur Flemming Award,

and

Bonita Saunders, 2001 Claytor Lecturer.

Bonita

V. Saunders presented the 2001 Claytor Lecture on January 13, 2001. The

National Association of Mathematicians (NAM) inaugurated the Claytor Lecture in

1980 in honor of W. W. Schieffelin Claytor, the third African American to earn

a Ph.D. in Mathematics, and the first to publish mathematics outside of his

thesis. Founded in 1969, NAM is a non-profit professional organization whose

mission is "to promote excellence in the mathematical sciences and promote the

mathematical development of underrepresented American minorities." Saunders is

the twentieth mathematician to be selected as Claytor lecturer. Previous

honorees include Fern Hunt, also of ITL, David H. Blackwell, the first African

American elected to the National Academy of Sciences, and J. Ernest Wilkins,

who at 19 became the youngest African American to receive a doctorate in the

mathematical sciences. Saunders' lecture, entitled "Numerical Grid Generation

and 3D Visualization of Special Functions," was delivered at a special session

of the Joint Mathematics Meetings in New Orleans.

Geoffrey

McFadden, Leader of the MCSD Mathematical Modeling Group, was elected a Fellow

of the American Physical Society (APS).

McFadden was recognized "for fundamental insights into the effect of

fluid flow on crystal growth and for an innovative approach to phase field methods

in fluid mechanics." McFadden's interest in the study of crystal growth began

when he joined NIST in 1981. Since then he has published more than 100 papers

with colleagues in MSEL, as well as with researchers at external institutions

such as Carnegie Mellon University, Northwestern University, Rensselaer

Polytechnic, and the University of Southampton. The APS's Division of Fluid

Dynamics recommended the nomination. Fellowship in the APS is limited to no

more than one-half of one percent of APS membership. Presentation of the award

took place at the Annual Meeting of the Division of Fluid Dynamics held in San

Diego, November 18-20, 2001.

Raghu

Kacker was elected Fellow of the American Society for Quality and recognized at

the 55th Annual Quality Congress held in Charlotte, NC on May 6-9, 2001. He was

cited for pioneering work in the advancement of the application of the

statistical sciences, especially Taguchi methods, to quality, measurement

science, calibration and inter-laboratory comparisons.

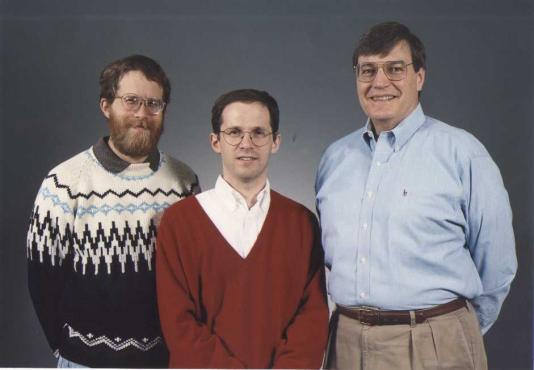

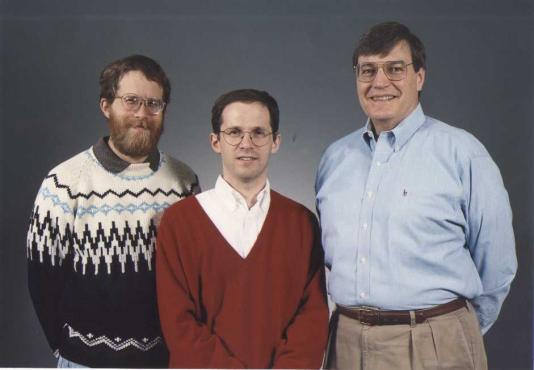

Raghu Kacker

(left) was elected a Fellow of the American Society for Quality, and

Geoffrey

McFadden (right) was elected a Fellow of the American Physical Society.

NIST

Awards. In December 2000, Stephen

Langer of MCSD, along with Ed Fuller and Andy Roosen of MSEL, received the NIST

Jacob Rabinow Applied Research Award. The Rabinow Award is presented yearly in

recognition of outstanding application of NIST research in industry. Langer, Fuller, and Roosen were honored for

the development of OOF, a system for the modeling of materials with complex

microstructures. Also in December 2000,

a team of MCSD staff from the Scientific Applications and Visualization Group

was awarded a NIST Bronze Medal for their work in visualization of

Bose-Einstein condensates. The honorees were Judith Devaney, William George,

Terence Griffin, Peter Ketcham, and Steve Satterfield. They were cited for

their work with colleagues in the NIST Physics Lab to develop unique 3D color

representations of the output of computational models of Bose-Einstein

condensates. The visualizations illustrated properties of the condensates which

were previously unknown, and which have since been experimentally verified. The

pictures were selected as cover illustrations by Physics Today (Dec. 1999),

Parity magazine (Japanese, Aug. 2000), Optics and Photonics News (Dec. 2000),

and were featured in a title spread for an article in Scientific American (Dec.

2000).

Winners of the 2000 NIST Jacob Rabinow Applied

Research Award (left to right):

Andrew

Roosen (MSEL), Stephen Langer, and Edwin Fuller (MSEL).

Winners of the 2000 NIST Bronze Medal: (front, left to right) Steven

Satterfield, Peter

Ketcham, Terence Griffin, (back, left to right) William George,

Judith Devaney.

Winners

of the 2001 NIST Bronze Medal: Roldan Pozo (left) and Ronald Boisvert (right)

In

December 2001, Ronald Boisvert and Roldan Pozo received a NIST Bronze Medal.

They were cited "for leadership in technology transfer introducing significant

improvements to the Java programming language and environment for scientific

computing applications."

ITL

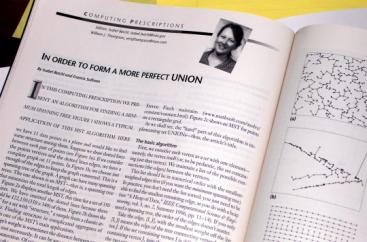

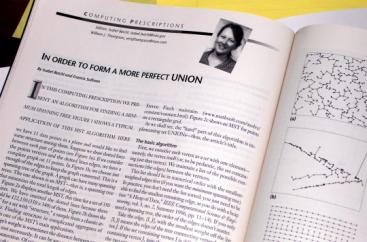

Awards. Isabel Beichl received the

first annual ITL Outstanding Publication Award in May 2001 in recognition of a

series of 11 tutorial articles on non-numeric techniques for scientific

computing published in Computing in Science and Engineering from

1997-2000. Beichl was the first winner

of this newly instated ITL award.

Five

MCSD staff members were among a group of 17 ITL staff named as joint recipients

of the Outstanding Contribution to ITL Award in May 2001. The award recognized members of the ITL

Diversity Committee. The MCSD awardees

were Judith Devaney (Chair), Isabel Beichl, Ronald Boisvert, Raghu Kacker, and

Bonita Saunders.

Isabel Beichl

won the first ITL Outstanding Publication Award for a series of

11 tutorial

articles published in Computing in Science and Engineering.

MCSD

staff members continue to be active in publishing the results of their

research. This year 49 publications authored by Division staff appeared, 28 of

which were published in refereed journals. Twenty-one additional papers have

been accepted and are awaiting publication. Another 22 are under review. MCSD staff

members were invited to give 40 lectures in a variety of venues and contributed

another 30 talks to conferences and workshops.

Four

short courses on Java and LabVIEW were provided by MCSD for NIST staff this

year. The Division lecture series

remained active, with 27 talks presented (five by MCSD staff members); all were

open to NIST staff. In addition, a

Scientific Object Oriented Programming User's Group, chaired by Stephen Langer,

was established. Six meetings of the

group have been held.

MCSD staff members also organize workshops,

minisymposia, and conferences to provide forums to interact with external

customers. This year, staff members were involved in organizing twelve external

events and three internal ones. For

example, a very successful workshop was held in late June to discuss the

current state of the OOF finite element program and to plan future

developments. Approximately 65 OOF

users and developers from 5 countries, 9 companies, 18 universities, and 4

national labs attended the two-day workshop. The workshop was co-sponsored by MCSD and the MSEL Center for

Theoretical and Computational Materials Science (CTCMS).

Software

continues to be a by-product of Division work, and the reuse of such software

within NIST and externally provides a means to make staff expertise widely

available. Several existing MCSD software packages saw new releases this year,

including Zoltan (grid partitioning, joint with Sandia National Laboratories),

OOMMF (micromagnetic modeling), OOF (material microstructure modeling), and TNT

(Template Numerical Toolkit for numerical linear algebra in C++).

Tools

developed by MCSD have led to a number of commercial products. Examples from

two past Division projects are f90gl and IMPI.

F90gl is a Fortran 90 interface to OpenGL graphics. Originally developed

by William Mitchell of MCSD for use in NIST applications, f90gl was

subsequently adopted by the industry-based OpenGL Architecture Review Board to

define the standard Fortran API for OpenGL. NIST's reference implementation has

since been included in commercial products of Lahey Computer Systems, Compaq,

NASoftware, and Interactive Software Services. Several others are planned. MCSD staff facilitated the development of

the specification for the Interoperable Message Passing Interface (IMPI)

several years ago. IMPI extends MPI to

permit communication between heterogeneous processors. We developed a Web-based

conformance testing facility for implementations. Several commercial

implementations are now under development. Several companies, including

Hewlett-Packard and MPI Software Technologies, demonstrated IMPI on the exhibit

floor of the SC'01 conference in Denver in November 2001.

Web

resources developed by MCSD continue to be among the most popular at NIST. The

MCSD Web server at math.nist.gov has serviced more than 38 million Web hits

since its inception in 1994 (9 million of which have occurred in the past

year). The Division server regularly handles more than 11,000 requests for

pages each day, serving more than 40,000 distinct hosts on a monthly

basis. AltaVista has identified

approximately 10,000 external links to the Division server. The seven most

accessed ITL Web sites are all services offered by MCSD:

- NIST Math Portal

- Matrix Market

- Guide to Available Mathematical

Software

- Division home page

- ACM Transactions on Mathematical

Software

- Digital Library of Mathematical

Functions

- Template Numerical Toolkit

Division

staff members continue to make significant contributions to their disciplines

through a variety of professional activities. Ronald Boisvert serves as Chair

of the International Federation for Information Processing (IFIP) Working Group

2.5 (Numerical Software). He also serves as Vice-Chair of the ACM Publications

Board. Donald Porter serves on the Tcl Core Team, which manages the development

of the Tcl scripting language. Daniel Lozier serves as chair of the SIAM

Special Interest Group on Orthogonal Polynomials and Special Functions.

Division

staff members serve on the editorial boards of eleven journals: ACM

Transactions on Mathematical Software (R. Boisvert and R. Pozo), Computing

in Science & Engineering (I. Beichl), Interfaces and Free Boundaries

(G. McFadden), Journal of Computational Methods in Science and Engineering

(M. Donahue), Journal of Computational Physics (G. McFadden), Journal

of Crystal Growth (G. McFadden), Journal of Numerical Analysis and

Computational Mathematics (I. Beichl and W. Mitchell), Journal of

Research of NIST (D. Lozier), Mathematics of Computation (D.

Lozier), SIAM Journal on Applied Mathematics (G. McFadden), and SIAM

Journal on Scientific Computing (B. Alpert).

Division

staff members also work with a variety of external working groups. Ronald

Boisvert and Roldan Pozo chair the Numerics Working Group of the Java Grande

Forum. Roldan Pozo chairs the Sparse Subcommittee of the BLAS Technical Forum.

Michael Donahue and Donald Porter are members of the Steering Committee of

muMag, the Micromagnetic Modeling Activity Group.

In

2001 NIST celebrated its centennial. As

part of the celebration, NIST published a centennial volume entitled A

Century of Excellence in Measurements, Standards, and Technology: A Chronicle

of Selected Publications of NBS/NIST, 1901-2000. The publication highlights

approximately 100 highly significant NBS/NIST publications of the last century.

CRC Press published this book in the fall of 2001. Four of the highlighted

publications are associated with the work of ancestor organizations to MCSD:

- C. Lanczos,

An Iteration Method for the Solution of the Eigenvalue Problem of Linear

Differential and Integral Operators, Journal of Research of the

National Bureau of Standards 45 (1950), pp. 255-282.

- M. R.

Hestenes and E. Stiefel, Methods of Conjugate Gradients for Solving Linear

Systems, Journal of Research of the National Bureau of Standards 49

(1952), pp. 409-436.

- M.

Abramowitz and I. Stegun (eds.), Handbook of Mathematical Functions,

NBS Applied Mathematics Series 55, U.S. Government Printing Office, 1964.

- J. R.

Edmonds, Paths, Trees and Flowers, Canadian Journal of Mathematics 17

(1965), pp. 449-467.

R. Boisvert, D.

Lozier, D. O'Leary, and C. Witzgall developed vignettes in the published volume

describing these publications.

The

year 2002 also marks the 50th anniversary of the original

Hestenes-Stiefel paper on the conjugate gradient method cited above. This anniversary will be commemorated at a

conference on Iterative Methods for Large Linear Systems to be held at the ETH

in Zurich in February 2002. MCSD is a

joint sponsor of this conference.

MCSD

attempts to maximize the impact of its work.

In order to do this, it must continually assess the future needs of its

customers, as well as the mathematical and computational technologies that can

help meet those needs. This is the role

of strategic planning. Information

gathered in this way is used to set priorities for selecting projects, developing

new areas of expertise, and hiring new staff.

MCSD

assesses the needs of its customers in a variety of ways.

- One-on-one

interactions at the staff level.

- Attendance

at seminars, workshops, and conferences.

- Interactions with other organizations

at the management level.

- Monitoring of planning reports of

customer organizations, government organizations, and private think tanks.

- Participation in the development of

research proposals with customer organizations.

- Participation in formal strategic

planning efforts.

Advances

in mathematical and computational technologies are tracked in the course of a

variety of professional activities such as participation in workshops and

conferences, monitoring of technical magazines and journals, and consultation

with external technical experts.

Many

of these planning activities occur on a continuing basis during the year. A formal Division strategic plan was

developed in 1999 and will be revisited in 2002. The major themes identified in that plan were the following.

o

Measurement and Calibration for the Virtual Sciences

The ordinary industrial user of complex

modeling packages has few tools available to assess the robustness,

reliability, and accuracy of models and simulations. Without these tools and methods to instill confidence in

computer-generated predictions, the use of advanced computing and information

technology by industry will lag behind technology development. NIST, as the nation’s metrology lab, is

increasingly being asked to focus on this problem.

o

Evolving Architecture of Tools, Libraries, and

Information Systems for Science and Engineering

Research studies undertaken by laboratories

like NIST are often outside the domain of commercial modeling and simulation

systems. Consequently, there is a great

need for the rapid development of flexible and capable research-grade modeling

and simulation systems. Components of

such systems include high-level problem specification, graphical user

interfaces, real-time monitoring and control of the solution process, visualization,

and data management. Such needs are

common to many application domains, and re-invention of solutions to these

problems is quite wasteful.

The availability of low-cost networked

workstations will promote growth in distributed, coarse grain computation. Such an environment is necessarily

heterogeneous, exposing the need for virtual machines with portable object

codes. Core mathematical software

libraries must adapt to this new environment.

All resources in future computing

environments will be distributed by nature.

Components of applications will be accessed dynamically over the network

on demand. There will be increasing

need for online access to reference material describing mathematical

definitions, properties, approximations, and algorithms. Semantically rich exchange formats for

mathematical data must be developed and standardized. Trusted institutions, like NIST, must begin to populate the net

with such dynamic resources, both to demonstrate feasibility and to generate

demand, which can ultimately be satisfied in the marketplace.

o

Emerging Needs for Applied Mathematics

The NIST Laboratories will remain a rich

source of challenging mathematical problems.

MCSD must continually retool itself to be able to address needs in new

application areas and to provide leadership in state-of-the-art analysis and

solution techniques in more traditional areas.

Many emerging needs are related to applications of information

technology. Examples include VLSI

design, security modeling, analysis of real-time network protocols, image

recognition, object recognition in three dimensions, bioinformatics, and

geometric data processing. Applications

throughout NIST will require increased expertise in discrete mathematics,

combinatorial methods, data mining, large-scale and non-standard optimization,

stochastic methods, fast semi-analytical methods, and multiple length-scale

analysis.

This

year NIST embarked on an Institute-wide strategic planning process called NIST

2010. Four technical areas were identified

for emphasis.

- Nanotechnology

- Health care

- Knowledge management

- Homeland security

In addition, three

internal infrastructure areas were identified.

- People

- Customer focus

- Information technology support

MCSD

staff members are currently working with NIST-wide committees to understand

current NIST capabilities in these areas and develop specific plans. We will work to align our programs to be

able to support these efforts.

In

addition to these planning efforts, we have had extensive discussions

with management and staff of the NIST Physics Lab related to quantum

information, and the NIST Building and Fire Research Lab related to

computer-aided construction. Finally,

we have exchanged ideas with members of the government-wide Interagency Committee

on Extramural Mathematics Programs (ICEMAP), whose meetings we have

participated in this past year.

Two

new postdoctoral appointments were made during the past year. Katharine Gurski

joined MCSD in January 2001 as a National Research Council postdoctoral fellow

working with Geoffrey McFadden. She has

a Ph.D. in applied mathematics from the University of Maryland, and had a

previous postdoctoral appointment at the NASA Goddard Space Flight Center. She has been developing numerical methods

for the solution of axisymmetric boundary integral equations for applications

in materials science, including dendritic growth. In October 2001 David Daegene Song also began a two-year

postdoctoral appointment with MCSD. A

recent graduate of Oxford University, where he received a Ph.D. in physics,

Song was associated with the Clarendon Laboratory’s Center for Quantum

Computation. He has been working on

issues related to entanglement swapping and the analysis of quantum algorithms.

Raghu

Kacker began a one-year detail from the ITL Statistical Engineering Division to

MCSD to investigate the mathematical and statistical questions

associated with virtual measurement systems. He is also assisting with the DLMF

project.

Annette Shives, Secretary for MCSD’s Scientific

Applications and Visualization Group, retired on September 28, 2001 after 24

years of government service. Yolanda Parker, formerly of the NIST Manufacturing

Engineering Laboratory, was hired to take over the administrative operations of

the group, as well as to perform new duties related to the operations of the

MCSD Visualization Lab.

Three

new foreign guest researchers began their terms in MCSD this year: Julien

Franiette, Aboubekre Zahid, and F. Pokam.

Each is working in the Scientific Applications and Visualization

Group. A. Samson and F. Pokam also

completed their terms during the year.

MCSD

provided support for nine student staff members on summer appointments during

FY 2001. Such appointments provide valuable experiences for students interested

in careers in mathematics and the sciences. In the process, the students can

make very valuable contributions to MCSD programs. This year's students were as

follows.

| Name, Affiliation | Supervisor | Project |
|---|---|---|
| E. Baer, Montgomery Blair High School | A. Kearsley | Numerical and theoretical properties of algorithms for the solution of linear systems were studied. In particular, an application of Morris Newman’s work on p-adic arithmetic was implemented. |
| B. Blaser, Carnegie Mellon Univ. | B. Saunders | Development of graphics for the DLMF project. |
| D. Cardy, Montgomery Blair High School | F. Hunt | Explored methods for distinguishing coding and non-coding regions of DNA sequences based on the mutual entropy function. His work involved use of GenPatterns, a tool for analyzing statistical patterns in DNA and RNA. |
| J. Carlson, Dartmouth College | I. Beichl | Developed a probabilistic algorithm to estimate the number of independent sets in a graph. She wrote a Matlab program to do this and applied the results to computing the 2d and 3d hard sphere entropy constants for cubic lattices. |
| D. Caton, Univ. of Maryland | J. Devaney | Developing an algorithm to recognize images in a large database of images with similar texture characteristics. |
| Stefanie Copley, Univ. of Colorado | J. Filla | Vislab and scientific visualization support, specializing in nonlinear video editing and 3D stereo data presentation. |
| R. Jin, Montgomery Blair High School | S. Langer | Apply image analysis techniques to micrographs of materials microstructure, with the goal of developing software for automatic grain boundary detection. The software will be included in the OOF project. |
| E. Kim, Stanford Univ. | B. Saunders | Development of graphics for the DLMF Project. |
| K. McQuighan, Montgomery Blair High School | T. Kearsley | The theoretical properties of algorithms for quantum computers were studied. In particular, the application of Grover's method to difficult search problems was considered. |

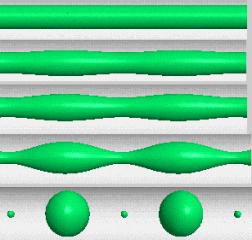

Charge density on a computed

diffusion-limited cluster aggregate.

2.1. Applied Mathematics

Alfred S. Carasso

In

work yet to be published, Alfred Carasso's direct blind deconvolution

techniques have been shown capable of producing useful results, in real time,

on a wide variety of real blurred images, including astronomical, Landsat and

aerial images, MRI and PET brain scans, and electron microscope imagery. A key

role is played by a class of functions introduced in the 1930's by Paul Lévy in

connection with his fundamental work on the Central Limit Theorem. The

potential usefulness in image processing of these so-called Lévy "stable" laws

had not previously been suspected.

In

the last several years, digital imagery has become pervasive in numerous areas

of applied science and technology, and digital image processing has matured

into a major discipline within Information Technology. Image processing is now

a vast research activity that lies at the intersection of Optics, Electronics,

Computer Science and Applied Mathematics. SPIE, IEEE, and SIAM are three major

scientific societies that support significant research in this area.

In

most cases, image acquisition involves a loss of resolution. This may come

about from imperfect optics, from the scattering of photons before they reach

their intended target, from turbulent fluctuations in the refractive index while

imaging through the atmosphere, from image motion or defocusing, or from a

combination of these and a myriad other small aberrations. The resulting

acquired image is typically blurred, and this blur, when known, can be

described by a point spread function (psf) that mathematically

characterizes the cumulative effect of all these distortions. In an idealized

imaging system, the psf is the Dirac delta function and has zero spread. In a

real system, there is always some point spread, and this delta function

typically becomes spread out onto some type of bell-shaped curve. There is

considerable interest in improving image resolution by removing some of this

blur through computer processing of the given blurred image.
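
The blur model described above, in which the acquired image is the true image convolved with the psf, can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not code from the project: the function names `gaussian_psf` and `blur`, the periodic (circular) boundary treatment, the 64-pixel grid, and the value of `sigma` are all hypothetical choices.

```python
import numpy as np

def gaussian_psf(n, sigma):
    """n-by-n Gaussian psf centered at index (0, 0) with periodic wrap-around; sums to 1."""
    k = np.fft.fftfreq(n, d=1.0 / n)          # integer offsets 0, 1, ..., -2, -1
    x, y = np.meshgrid(k, k, indexing="ij")
    psf = np.exp(-(x**2 + y**2) / (2.0 * sigma**2))
    return psf / psf.sum()

def blur(image, psf):
    """Circular convolution of image with psf, computed with FFTs."""
    return np.real(np.fft.ifft2(np.fft.fft2(image) * np.fft.fft2(psf)))

n = 64
point = np.zeros((n, n))
point[n // 2, n // 2] = 1.0                   # a single bright pixel

delta = np.zeros((n, n))
delta[0, 0] = 1.0                             # discrete delta function: zero spread

ideal = blur(point, delta)                    # idealized system: image is unchanged
real = blur(point, gaussian_psf(n, sigma=2.0))  # real system: bell-shaped spot

print("ideal system preserves the point:", np.allclose(ideal, point))
print("peak after Gaussian blur:", real.max())
```

With the delta function as psf the point source is reproduced exactly, while the Gaussian psf spreads the same unit of intensity over a bell-shaped spot with a much lower peak.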

Image

deblurring is one of several distinct topics within image

processing (image compression is another), and it is one with considerable

mathematical content. Deblurring

involves deconvolution in an integral equation. This is a notoriously difficult

ill-conditioned problem in which data noise can become amplified and overwhelm

the desired true solution. Depending on the type of point spread function, this

deconvolution problem is mathematically equivalent to an ill-posed initial

value problem for a partial differential equation in two space variables. For

example, Gaussian psfs, which are ubiquitous in applications, lead to solving

the time-reversed heat equation. Other types of parabolic partial differential

equations, associated with nonlinear anisotropic diffusion, have recently been

advocated as generic image enhancement tools in image processing. That

approach, originating in France in the early 1990's, is computationally highly

intensive, and has yet to be evaluated. In another direction, probabilistic

methods based on Bayesian analysis together with Maximum Likelihood or Maximum

Entropy criteria, have long been used in Astronomy and Medical Imaging. These

are again nonlinear methods that must be implemented iteratively. A

characteristic feature of such probabilistic approaches is that large-scale

features in the image can typically be reconstructed after one or two dozen

iterations, while several thousand further iterations, and several hours of CPU

time, are usually necessary to reconstruct fine detail.
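
The noise amplification mentioned above is easy to reproduce. The sketch below is an illustration only, with a hypothetical test image, noise level, and blur width: it blurs a synthetic image with a Gaussian psf, adds a small amount of noise, and then deconvolves naively by dividing by the transfer function in Fourier space. For a Gaussian psf this division corresponds to running the heat equation backwards in time.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 64

# Fourier transform of a Gaussian psf with standard deviation sigma (in pixels).
k = np.fft.fftfreq(n)                         # frequencies in cycles per pixel
kx, ky = np.meshgrid(k, k, indexing="ij")
sigma = 2.0
otf = np.exp(-2.0 * (np.pi * sigma) ** 2 * (kx**2 + ky**2))

# Synthetic sharp image: a bright square on a dark background.
sharp = np.zeros((n, n))
sharp[24:40, 24:40] = 1.0

blurred = np.real(np.fft.ifft2(np.fft.fft2(sharp) * otf))
noisy = blurred + 1e-3 * rng.standard_normal((n, n))    # small data noise

# Naive deconvolution: divide by the transfer function, with no regularization.
naive = np.real(np.fft.ifft2(np.fft.fft2(noisy) / otf))

print("smallest transfer function value:", otf.min())
print("max error of blurred image:", np.abs(noisy - sharp).max())
print("max error of naive inverse:", np.abs(naive - sharp).max())
```

Because the transfer function is vanishingly small at high frequencies, the division magnifies the noise by many orders of magnitude and the naive result is useless, which is why some form of regularization is required.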

In many cases, the psf describing the blur

is unknown or incompletely known. So-called blind deconvolution seeks to

deblur the image without knowing the psf. This is a much more difficult problem

in which ill-conditioning is compounded with non-uniqueness. Most known

approaches to that problem are iterative in nature and seek to

simultaneously reconstruct both the psf and the deblurred image. As might be

expected, that iterative process can become ill-behaved and develop stagnation

points or diverge altogether. As a rule, iterative blind deconvolution

procedures are not well suited for real-time processing of large size images of

complex objects.

Carasso's

work in image deblurring has focused on developing reliable direct

non-iterative methods, in which Fast Fourier Transform algorithms are used to

solve appropriately regularized versions of the underlying ill-posed parabolic

equation problem associated with the blur. When the psf is known, Carasso's

SECB method can deblur 512 by 512 images in about 1 second of CPU time on

current desktop platforms. Moreover, in a recent SIAM Journal on Applied

Mathematics paper, Carasso has developed two new direct blind deconvolution

techniques, the BEAK method and the APEX method. These methods are based on

detecting the signature of the psf from appropriate 1-D Fourier analysis of the

blurred image. This detected psf is then input into the SECB method to obtain

the deblurred image. When applicable, either of these two distinct blind

methods can deblur 512x512 images in less than a minute of CPU time, which

makes them highly attractive in real-time applications.
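
To give a feel for what a direct, non-iterative FFT-based deblurring step looks like when the psf is known, the sketch below applies a generic Tikhonov-style regularized inverse in Fourier space. It is not the SECB method, whose regularization is different and is not described in this report; the function name `tikhonov_deblur`, the regularization parameter `lam`, and the synthetic test data are assumptions made for illustration.

```python
import numpy as np

def tikhonov_deblur(blurred, otf, lam):
    """One-shot regularized deconvolution in Fourier space (Tikhonov-style, not SECB)."""
    g_hat = np.fft.fft2(blurred)
    f_hat = np.conj(otf) * g_hat / (np.abs(otf) ** 2 + lam)
    return np.real(np.fft.ifft2(f_hat))

rng = np.random.default_rng(0)
n = 64
k = np.fft.fftfreq(n)
kx, ky = np.meshgrid(k, k, indexing="ij")
otf = np.exp(-2.0 * (np.pi * 2.0) ** 2 * (kx**2 + ky**2))   # Gaussian psf, sigma = 2 pixels

# Synthetic data: blurred square plus a little noise.
sharp = np.zeros((n, n))
sharp[24:40, 24:40] = 1.0
blurred = np.real(np.fft.ifft2(np.fft.fft2(sharp) * otf))
noisy = blurred + 1e-3 * rng.standard_normal((n, n))

restored = tikhonov_deblur(noisy, otf, lam=1e-4)

err_blurred = np.linalg.norm(noisy - sharp)
err_restored = np.linalg.norm(restored - sharp)
print("error before deblurring:", err_blurred)
print("error after deblurring: ", err_restored)
```

The whole restoration costs two FFTs and one pointwise division, which is what makes direct methods of this general kind attractive for real-time use. The parameter `lam` trades noise suppression against sharpness and would need tuning for real data.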

The

APEX method is predicated on a class of shift invariant blurs, the class G,

which can be expressed as a finite convolution product of radially symmetric

two-dimensional Lévy stable density functions. This class includes Gaussians,

Lorentzians, and their convolutions, as well as many other kinds of bell-shaped

curves with heavy tails. The motivation for using the class G as the

framework for the APEX method lies in previously unrecognized basic work by C.

B. Johnson, an electronics engineer who, in the 1970's, discovered non-Gaussian

heavy-tailed psfs in a wide variety of electron optical imaging devices. In

fact, Carasso has been energetic in making Johnson's work more widely known

within the imaging research community, has corresponded with Johnson, and has

succeeded in drawing the attention of Mandelbrot, Woyczynski, and Nolan, three

eminent specialists on Lévy processes, to Johnson's seminal work. Very

recently, Woyczynski has interviewed Johnson in connection with Woyczynski's

forthcoming book on Lévy processes in the physical sciences.
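
The class G described above is convenient to work with in Fourier space. A radially symmetric Lévy stable density has a Fourier transform of the form exp(-alpha (xi^2 + eta^2)^beta) with 0 < beta <= 1, where beta = 1 gives a Gaussian and beta = 1/2 a Lorentzian, and a convolution of such densities corresponds to a product of these transforms. The sketch below builds such transfer functions on an FFT grid; the function names, grid size, and parameter values are hypothetical, and the symbol alpha for the width parameter is an assumed notation.

```python
import numpy as np

def levy_otf(n, alpha, beta):
    """Transfer function exp(-alpha * (xi^2 + eta^2)^beta) on an n-by-n FFT grid."""
    k = 2.0 * np.pi * np.fft.fftfreq(n)           # angular frequencies per pixel
    xi, eta = np.meshgrid(k, k, indexing="ij")
    return np.exp(-alpha * (xi**2 + eta**2) ** beta)

def class_g_otf(n, components):
    """Product of Levy stable transfer functions, i.e. a convolution of the psfs."""
    otf = np.ones((n, n))
    for alpha, beta in components:
        otf *= levy_otf(n, alpha, beta)
    return otf

n = 128
gaussian = levy_otf(n, alpha=2.0, beta=1.0)       # beta = 1: Gaussian
lorentzian = levy_otf(n, alpha=2.0, beta=0.5)     # beta = 1/2: Lorentzian
mixed = class_g_otf(n, [(2.0, 1.0), (2.0, 0.5)])  # convolution of the two psfs

# Recover each psf from its transfer function and compare tails 20 pixels out.
psf_gauss = np.real(np.fft.ifft2(gaussian))
psf_lorentz = np.real(np.fft.ifft2(lorentzian))
print("Gaussian psf at 20 pixels:  ", psf_gauss[0, 20])
print("Lorentzian psf at 20 pixels:", psf_lorentz[0, 20])
```

Twenty pixels from the center the Gaussian psf is negligible while the Lorentzian is still clearly nonzero, which is the heavy-tail behavior referred to in the text.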

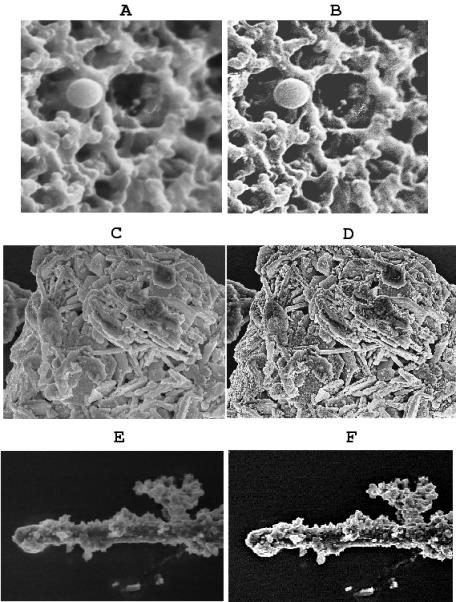

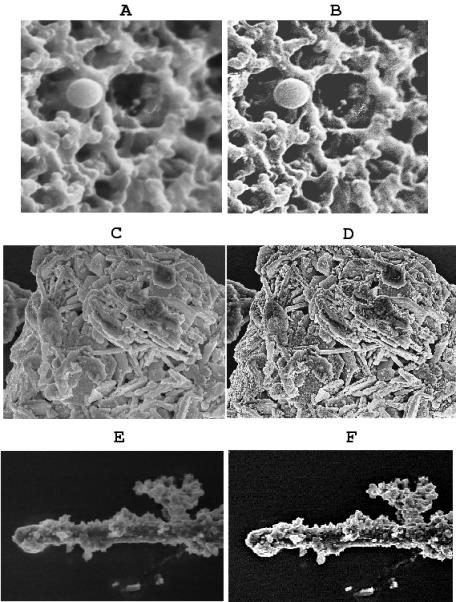

APEX method in image sharpening

(A)

Original transverse PET brain image. (B) Enhanced PET image. Bright spots

indicating areas of the brain responding to applied external stimuli were

barely visible in original image. Here, beta=0.284. (C) Original scanning

electron micrograph of mosquito's head showing compound eye. (D) Enhanced image

shows increased contrast and brings eye into sharper focus. Here, beta=0.157.

(E) Original F-15 plane image. (F) Enhanced image brings out terrain features

and condensation trails behind aircraft. Here, beta=0.107.

Lévy densities are characterized by an exponent beta that expresses the degree of

departure from the Gaussian density, for which beta=1.0. In physical

applications where Lévy densities appear, values of beta less than 0.5 are generally

rare. While not all images can be significantly improved with the APEX method,

there is a wide class of images for which APEX processing is beneficial. These

images have the property that their 1-D Fourier transform traces are globally

logarithmically convex. When the APEX method is applied to such an image, a

specific value of beta is detected. Typical APEX-detected values of beta

are on the order of 0.25. The physical origin of such beta values, if any, is

uncertain. However, it is remarkable that useful sharpening of imagery from a

wide variety of scientific and technological applications can be accomplished

with such heavy-tailed psfs. The appearance of low-exponent stable laws in the

present context is of great interest to specialists on Lévy processes. The APEX method is based on ill-posed

continuation in diffusion equations involving fractional powers of the

Laplacian. Mathematically, such an approach differs fundamentally from

currently more popular techniques based on solving well-posed nonlinear

anisotropic diffusion equations. Interestingly, the APEX method generally

produces sharper imagery, with much shorter computing times.
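
To illustrate why a Lévy psf leaves a recognizable signature in a 1-D Fourier trace, the sketch below fits the exponent beta to a synthetic, noise-free trace. It is not the APEX detection procedure itself, which works on the blurred image and must also contend with the unknown spectrum of the sharp image. The function names, the scale parameter alpha, and the log-log least-squares fit are assumptions made for illustration.

```python
import math

def levy_trace(xi, alpha, beta):
    """Log-magnitude of an isotropic Levy transfer function along one axis."""
    return -alpha * abs(xi) ** (2.0 * beta)

def fit_levy_exponent(xis, log_mags):
    """Fit ln(-log_mag) = ln(alpha) + 2*beta*ln(xi) by ordinary least squares."""
    xs = [math.log(x) for x in xis]
    ys = [math.log(-v) for v in log_mags]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx                      # slope of the line equals 2*beta
    intercept = mean_y - slope * mean_x    # intercept equals ln(alpha)
    return math.exp(intercept), slope / 2.0

# Synthetic trace with hypothetical parameters alpha = 0.05, beta = 0.25.
xis = list(range(1, 129))
trace = [levy_trace(x, 0.05, 0.25) for x in xis]
alpha_hat, beta_hat = fit_levy_exponent(xis, trace)
print(f"alpha = {alpha_hat:.4f}, beta = {beta_hat:.4f}")
```

On real blurred images the trace is noisy and includes the image's own spectrum, so a fit of this kind is at most a starting point.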

Future work will explore more fully applications of this technique to NIST imaging

problems, as well as to selected problems in other areas.

Alfred S. Carasso

David S. Bright

(NIST CSTL)

András E. Vladár

(NIST MEL)

Scanning electron microscopes (SEM) are basic research tools in many of NIST's programs

in nanotechnology. Moreover, considerable expertise resides at NIST on the

theory behind these instruments, as well as on the analysis and interpretation

of SEM imagery. David Bright has created the LISPIX image analysis package and

has used it to automate electron microscopes. András Vladár is the SEM Project

Leader in the Nanoscale Metrology Group, and he has helped define and implement

the basic standards for the measurement and monitoring of electron microscope

imaging performance. That expertise was

vital to the success of this project, which extended over a two-year period and

involved well over 1 gigabyte of processed imagery.

A major concern in scanning electron microscopy is the loss of resolution due to

image blurring caused by electron beam point spread. The shape of that beam can

change over time, and is usually not known to the microscopist. Hence, the

point spread function (psf) describing the blur is generally unknown.

Nevertheless, there is great interest in improving resolution by reducing this

blur. The images we are concerned with come from scanning electron beam

instruments such as the field emission gun scanning electron microscope

(FEGSEM), a high-resolution instrument, and the environmental scanning electron

microscope (ESEM), a lower resolution instrument with more flexible sample

handling capability. SEM micrographs

are typically large images of complex objects.

Real-time blind deconvolution of SEM imagery, if achievable, would significantly

extend the capability of electron microprobe instrumentation. Previously gained

experience with the APEX method on images from very diverse imaging modalities

naturally suggests use of this technique. However, SEM imaging differs from

other electron-optic imaging, in that the instrument transform I

that converts a sample s(x,y) into an image i(x,y) has a

nonlinear component, M, which describes the details of the nonlinear

interaction between the electrons and the material. M is usually studied

by Monte Carlo simulations applied to electron trajectories, but is not

readily invertible. The second component of I, call it q,

describes blurring due to the electron beam point spread, along with some of

the instrument's electronics. That component is often represented as a

convolution, so that the SEM micrograph i(x,y) is the convolution of q

with M(s(x,y)). The APEX method is a linear deconvolution technique

predicated on a restricted class of blurs, the class G, consisting of

finite convolution products of radially symmetric Lévy probability density

functions. It is by no means obvious

that the APEX method is applicable to SEM imagery.
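
The two-component model described above can be written compactly as follows. This is a sketch under the stated convolution assumption. The integral form and the hat notation for the Fourier transform are conventions assumed here, not taken from the report.

```latex
i(x,y) \;=\; \bigl(q * M(s)\bigr)(x,y)
\;=\; \iint q(x-u,\,y-v)\, M(s)(u,v)\, du\, dv ,
\qquad
\hat{i}(\xi,\eta) \;=\; \hat{q}(\xi,\eta)\,\widehat{M(s)}(\xi,\eta).
```

Under this model, a linear deconvolution that removes q can at best return an estimate of M(s), not of the sample s itself, since M is not readily invertible.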

Nevertheless, when the APEX method was applied to a large variety of original SEM

micrographs, the method was found to be quite useful in detecting and

enhancing fine detail not otherwise discernible. Several examples are shown in

the accompanying Figure. In addition, quantitative sharpness analysis of ‘ideal

sample’ micrographs, using a methodology originally developed by the NIST Nanoscale

Metrology Group to monitor SEM imaging performance, shows that APEX processing

can actually produce sharper imagery than is achievable with optimal

microscope settings. On such ideal sample micrographs, sharpness increases on

the order of 15% were obtained as a result of APEX processing. A crucial

element in this work was the "marching backwards in time" feature of the

APEX method, which allows for deconvolution in "slow motion". The APEX

method sharpens the image, while simultaneously increasing contrast and

brightness, by restoring some of the high frequency content that had been

attenuated in the course of imaging the sample. Slow motion deconvolution

allows the user to terminate the APEX process before brightness, contrast, or

noise becomes excessive.
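
The idea of slow motion deconvolution can be sketched in one dimension. The code below assumes a single Lévy factor with transfer function exp(-alpha |k|^(2 beta)), treats the blurred signal as the state at time t=1 of the corresponding fractional diffusion process, and marches back to any t between 1 and 0. It deliberately omits the regularization that the SECB method supplies, so it is meaningful only for noise-free data. The names dft, levy_otf, apply_filter, and march_back, and the parameter values, are hypothetical.

```python
import cmath
import math

def dft(x, inverse=False):
    """Naive O(n^2) discrete Fourier transform, adequate for short signals."""
    n = len(x)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        s = sum(x[m] * cmath.exp(sign * 1j * 2 * math.pi * m * k / n)
                for m in range(n))
        out.append(s / n if inverse else s)
    return out

def levy_otf(n, alpha, beta, t=1.0):
    """Samples of exp(-t * alpha * |k|^(2*beta)) on the DFT frequency grid."""
    freqs = [k if k <= n // 2 else k - n for k in range(n)]
    return [math.exp(-t * alpha * abs(f) ** (2.0 * beta)) for f in freqs]

def apply_filter(signal, gains):
    """Multiply the spectrum of a real signal by real, symmetric gains."""
    spectrum = dft(signal)
    filtered = [c * g for c, g in zip(spectrum, gains)]
    return [v.real for v in dft(filtered, inverse=True)]

def march_back(blurred, alpha, beta, t):
    """Return u(t) for 0 <= t <= 1, given blurred = u(1). Unregularized."""
    # Passing t - 1 <= 0 turns the decay into the growth factor
    # exp((1 - t) * alpha * |k|^(2*beta)), which undoes part of the blur.
    return apply_filter(blurred, levy_otf(len(blurred), alpha, beta, t - 1.0))

ALPHA, BETA = 0.5, 0.25  # hypothetical psf parameters
sharp = [1.0 if 8 <= j < 24 else 0.0 for j in range(32)]
blurred = apply_filter(sharp, levy_otf(32, ALPHA, BETA))

for t in (1.0, 0.5, 0.0):
    u = march_back(blurred, ALPHA, BETA, t)
    steepest = max(abs(u[j + 1] - u[j]) for j in range(31))
    print(f"t = {t:.1f}  steepest edge = {steepest:.3f}")
```

Stopping at an intermediate t, such as 0.5, gives a partially sharpened signal. With noisy data the growth factor also amplifies noise, which is why the ability to halt early matters.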

As in all inverse problems, successful use of the APEX method requires a priori

knowledge about the solution. Here, such prior knowledge takes the form of

training and experience on the part of the microscopist, whose judgment is

called upon to distinguish genuine features in the presence of noise and

visually select the best reconstruction. Several experienced NIST microscopists

were involved in evaluating the merits of APEX processed imagery.

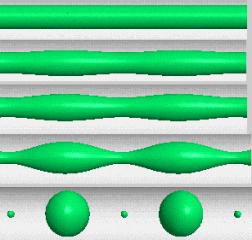

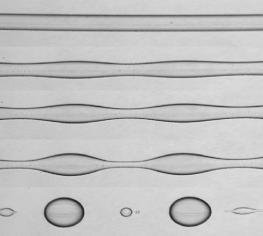

Real time APEX processing of Scanning Electron Microscope Imagery

Left column: original SEM micrographs. Right column: after APEX

processing. (A) Fly ash particle from Nuclepore filter. (C) Particle from

crystalline mercury compound. (E) Dirt particle from air filter. APEX

processing increases contrast and brightness as it sharpens the image, and

brings out fine scale detail not otherwise discernible.

In the adjoining Figure, the left column contains examples of original SEM

micrographs that were input into the APEX method, while the right column

contains the corresponding APEX images. All original micrographs were input as

8-bit 512 by 512 images, although smaller subimages are displayed in some cases.

These images are part of a wide class of SEM images with globally

logarithmically convex 1-D Fourier transform traces. Image (A) is a micrograph

of a 2-micron diameter fly ash particle on a Nuclepore filter. That image was

scanned from an old Polaroid print taken by John Small (NIST) in the 1970s

on a Cambridge SEM at the University of Maryland. The APEX image (B) reveals

imperfections on the Polaroid print and also enhances the texture of

the sample. Some of that texture may be due to the print rather than to the

sample itself. Moreover, the scratch near the upper right corner in image (B)

is not discernible in image (A). This example is a useful indicator of the

value of APEX processing. Presumably, actual imperfections or small defects in

some other sample would have been detected equally well. Also, the APEX image

(B) has more depth than the original image, in that the structure in the lower

left quadrant now appears closer to the viewer than does the rest of the image.

Image (C) is a 20-micron field of view micrograph of a particle from a complex

multi-form crystalline compound of mercury. This particular sample has very

complex and varied morphology, in addition to surface dusting or decoration of

fine particles almost everywhere. This becomes clearly evident only in the APEX

image (D), which contains substantially more information than does image (C).

Also, the three-dimensional structure of the particle is particularly well

rendered in image (D). Image (E) is a small portion of a 250-micron field of

view micrograph of a dust particle from an air vent, consisting of a complex

agglomeration of biological and mineral particles. Very striking APEX

enhancement is apparent in image (F).

As in the previously mentioned APEX applications, low values of the Lévy exponent

beta, typically on the order of 0.25, were detected in these SEM micrographs.

Future work will examine possible links between these values of beta and the

physics of electron microscopy. Plans are also underway to incorporate APEX processing

into the LISPIX package, a NIST-developed image analysis tool that is widely

used within the NIST Laboratories. In another direction, the possible use of

APEX methodology to produce a new quantitative measure of SEM imaging

performance is being explored.

James Lawrence

Computational geometry and image analysis techniques have been applied to photographic images

of polymer dewetting under various conditions in order to model the evolution

of these materials. This work is in collaboration with MSEL, which has massive

amounts of data as a result of combinatorial experimentation and which is in

great need of automatic techniques for analysis. Methods and software have been

devised to evaluate areas of wetness and dryness for their geometric properties

such as deviation of holes from perfect circularity and distribution of hole

centers. We computed Voronoi diagrams of the initial hole centers and we